Drawing shadows

Rendering “Blob” Shadows

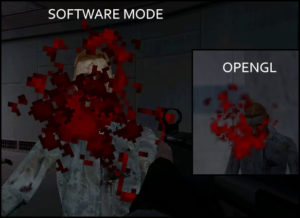

Resident Evil had simple circular shadows underneath every enemy and player. Replicating that in Half-Life sounded like a perfect job for the “TriAPI”, as all we needed was a quad underneath each enemy, textured with a simple sprite representing the shadow. Unfortunately, this proved quite complicated. The shadow needed to be black, but it also needed an “additive” style render mode to get soft, gradient edges instead of a hard border. Those two requirements conflict, because additive blending treats dark colours as invisible (much like the Screen or Linear Dodge (Add) blend modes in Photoshop).
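Writing the blend out per channel makes the conflict clearer. In its simplest form, additive blending adds the incoming (source) colour to whatever is already in the framebuffer (destination). The sketch below is a CPU-side illustration of that equation, not engine code. `Color` and `AdditiveBlend` are hypothetical names, and the sketch ignores any alpha or renderamt scaling the engine may apply on top.

```cpp
#include <algorithm>

// Hypothetical RGB colour with channels in [0, 1]. Illustration only.
struct Color {
    float r, g, b;
};

// Simplest form of additive blending, per channel:
//   result = src + dst, clamped to 1.
// A black source (0, 0, 0) adds nothing, so the destination is unchanged.
Color AdditiveBlend(Color src, Color dst) {
    return {std::min(src.r + dst.r, 1.0f),
            std::min(src.g + dst.g, 1.0f),
            std::min(src.b + dst.b, 1.0f)};
}
```

For example, `AdditiveBlend({0.0f, 0.0f, 0.0f}, floor)` returns `floor` unchanged whatever colour the floor is. A black shadow sprite therefore draws nothing at all.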

This conflict meant I had to make a bunch of raw OpenGL calls to get the blending function I wanted. This took a lot longer than it should have, as I still don’t fundamentally understand OpenGL, having never really worked with it before.

My first attempt iterated through every entity with an arbitrary for loop from 0 to 2048. For each index it cast to an entity, null-checked it, and then checked whether its movetype was MOVETYPE_WALK or MOVETYPE_STEP (players and NPCs respectively). It then called TriAPI right then and there to draw the shadow underneath. This worked in my test map, insofar as it rendered a quad under the NPC’s feet. It wasn’t until I tested a map with more than one NPC that I realised what I was doing was wrong. I was doing this drawing work at an arbitrary point in the frame, which caused all sorts of strange issues: shadows disappearing randomly, using the wrong texture, or simply not showing up at all.
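The entity scan from that first attempt can be sketched as a standalone filter. The sketch deliberately stops at collecting indices, because the immediate draw was the part that went wrong. Everything here is a hypothetical mock so that it compiles without the Half-Life SDK. `ClientEntity` is a cut-down stand-in for the engine’s client entity, and `MoveType` stands in for the SDK’s movetype constants. The lookup function is also passed in rather than calling the engine directly. The 2048 upper bound comes from the loop described above.

```cpp
#include <functional>
#include <vector>

// Hypothetical stand-in for the SDK's movetype constants.
enum class MoveType { None, Walk, Step, Fly, Toss, Push, Noclip };

// Hypothetical, cut-down client entity holding only what the filter needs.
struct ClientEntity {
    int index;
    MoveType movetype;
};

// Upper bound used by the original loop.
constexpr int kMaxEntities = 2048;

// Walks every entity slot, skips empty ones, and keeps walking or stepping
// entities (players and NPCs). Nothing is drawn here; the caller decides
// when rendering should happen.
std::vector<int> FindShadowCasters(
    const std::function<const ClientEntity*(int)>& getEntityByIndex) {
    std::vector<int> casters;
    for (int i = 0; i < kMaxEntities; ++i) {
        const ClientEntity* ent = getEntityByIndex(i);
        if (ent == nullptr) {
            continue;  // empty slot
        }
        if (ent->movetype == MoveType::Walk || ent->movetype == MoveType::Step) {
            casters.push_back(i);
        }
    }
    return casters;
}
```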

I settled on a global vector that stores every potential shadow for the frame. In StudioModelRenderer I push back the entity’s origin. Then, in HUD_DrawTransparentTriangles, I iterate through the vector, draw the shadows one by one, and clear the vector at the start of the next frame. This fixed all of the problems and also let me add further checks, such as distance culling.

However, this revealed another problem. NPC movement in GoldSource is rather janky, so the client-side entity’s origin is a poor place to draw a shadow. While an NPC is moving, that origin is only updated a few times a second, so the shadow visibly teleports forward in steps. This looked very odd. Thankfully, StudioModelRenderer also knows the model’s current position for the frame, meaning the animated, interpolated position at which the NPC is actually drawn. I grabbed modelpos directly from StudioSetupTransform and pushed that into the shadow vector instead. This fixed the problem instantly, and the shadows now move smoothly with their models.
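The collect-then-draw pattern can be sketched in plain C++ so that it compiles on its own. The hook names are hypothetical. `OnStudioModelDrawn` stands in for wherever StudioModelRenderer captures the position, and `DrawQueuedShadows` stands in for the body of HUD_DrawTransparentTriangles. `DrawShadowQuad` is a placeholder for the actual TriAPI quad and only counts calls here. The cull distance is an arbitrary assumed value. This version clears the queue right after drawing, which likewise leaves each frame starting with an empty queue.

```cpp
#include <cstddef>
#include <vector>

struct Vec3 {
    float x, y, z;
};

// Global per-frame list of positions that need a shadow.
std::vector<Vec3> g_ShadowQueue;

// Hypothetical cull distance in world units (an assumption; tune to taste).
constexpr float kShadowCullDistance = 1024.0f;

// Placeholder for the TriAPI quad draw; here it only counts calls.
std::size_t g_QuadsDrawn = 0;
void DrawShadowQuad(const Vec3& /*pos*/) {
    ++g_QuadsDrawn;
}

// Called from the model renderer with the interpolated model position
// (the modelpos value), not the entity's raw network origin.
void OnStudioModelDrawn(const Vec3& modelpos) {
    g_ShadowQueue.push_back(modelpos);
}

// Called once per frame from the transparent-triangle pass. Draws every
// queued shadow within range of the viewer, then empties the queue.
void DrawQueuedShadows(const Vec3& viewOrigin) {
    const float maxDistSq = kShadowCullDistance * kShadowCullDistance;
    for (const Vec3& pos : g_ShadowQueue) {
        const float dx = pos.x - viewOrigin.x;
        const float dy = pos.y - viewOrigin.y;
        const float dz = pos.z - viewOrigin.z;
        if (dx * dx + dy * dy + dz * dz > maxDistSq) {
            continue;  // distance cull
        }
        DrawShadowQuad(pos);
    }
    g_ShadowQueue.clear();
}
```

Because all drawing happens inside the one pass the engine reserves for transparent geometry, the render state is predictable. Collecting positions earlier also gives a single place to add checks like the distance cull.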

However, I still couldn’t find the right glBlendFunc to draw the shadow as a dark shape on the map. I tried a bunch of solutions, such as calling a function I didn’t really understand (getSurfaceBase()->createNewTextureID()), which creates a new texture and returns its ID. I then loaded TGAs straight afterwards in VGUI, assuming they would get the index createNewTextureID() + 1, but that assumption is wrong. As far as I can tell, Half-Life does not register VGUI textures into indices until PaintBackground is called on the element, so you can’t reliably know a VGUI texture’s ID in advance.

After trying a bunch of other things, I eventually threw it all out and went back to using a sprite. I created a black shadow additive sprite and realised I didn’t understand which argument of glBlendFunc controlled what. Was the first argument the blend factor for the background or for the foreground? Out of frustration, I wrote some code to cycle through every possible combination of blend factors. After a while I found one that finally worked:

glBlendFunc(GL_ZERO, GL_ONE_MINUS_SRC_COLOR);
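In `glBlendFunc(sfactor, dfactor)`, the first argument scales the incoming sprite texel (the source, or foreground). The second scales the colour already in the framebuffer (the destination, or background). With the default `GL_FUNC_ADD` equation, the two products are summed. With `GL_ZERO` and `GL_ONE_MINUS_SRC_COLOR`, that reduces to `dst * (1 - src)`: a bright texel darkens whatever is behind it, and a black texel leaves it untouched. The sketch below evaluates that per channel on the CPU. `Color` and `ShadowBlend` are hypothetical names for illustration, not part of OpenGL or the SDK.

```cpp
#include <algorithm>

// Hypothetical RGB colour with channels in [0, 1]. Illustration only.
struct Color {
    float r, g, b;
};

// CPU-side mirror of glBlendFunc(GL_ZERO, GL_ONE_MINUS_SRC_COLOR) under the
// default GL_FUNC_ADD equation, per channel:
//   result = src * 0 + dst * (1 - src) = dst * (1 - src)
Color ShadowBlend(Color src, Color dst) {
    auto channel = [](float s, float d) {
        s = std::min(std::max(s, 0.0f), 1.0f);  // keep the source in [0, 1]
        return d * (1.0f - s);                  // the GL_ZERO term adds nothing
    };
    return {channel(src.r, dst.r), channel(src.g, dst.g), channel(src.b, dst.b)};
}
```

A white texel turns the floor fully black, a black texel leaves it as-is, and a mid-grey texel halves its brightness. Under this mode, the bright parts of the sprite are what become the shadow, fading out toward a black background. That is the same layout additive sprites use.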

Graphically, it still needs work, but I’m fairly happy with the end result regardless.